Introduction

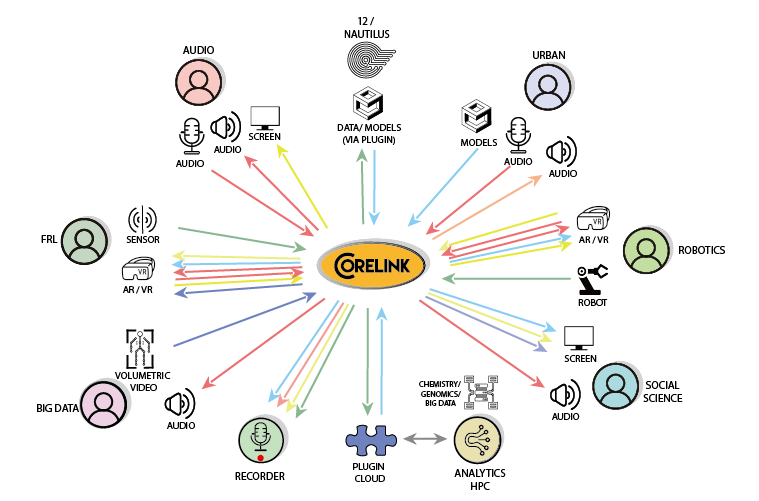

What is Corelink

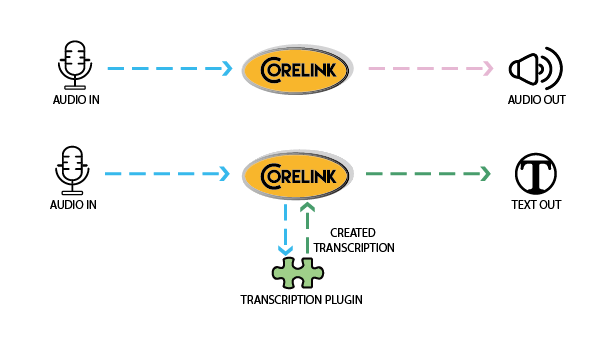

Corelink is a communication framework for real-time research applications, enabling efficient, low-latency data exchange between producers and consumers across platforms, with support for plugins to manipulate data on the fly.

With Corelink, you can easily organize and connect streams by type, either manually or programmatically, and configure plugins to apply processing or filters to streams on the server.

You can read more about Corelink's architecture or get started with one of our client libraries now.

Contributing to Corelink

Corelink is open-source, distributed under the MIT license. Check out the code on GitLab or learn how to contribute.

Corelink is developed by the High Speed Research Network team at New York University. Learn more about the team or contact us here.

Sample use-cases

Corelink is meant to accommodate a wide variety of use-cases and applications, in a single location or across the internet.

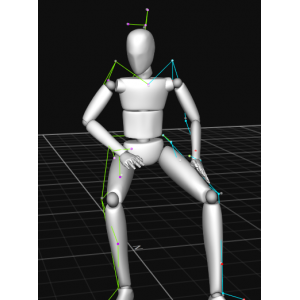

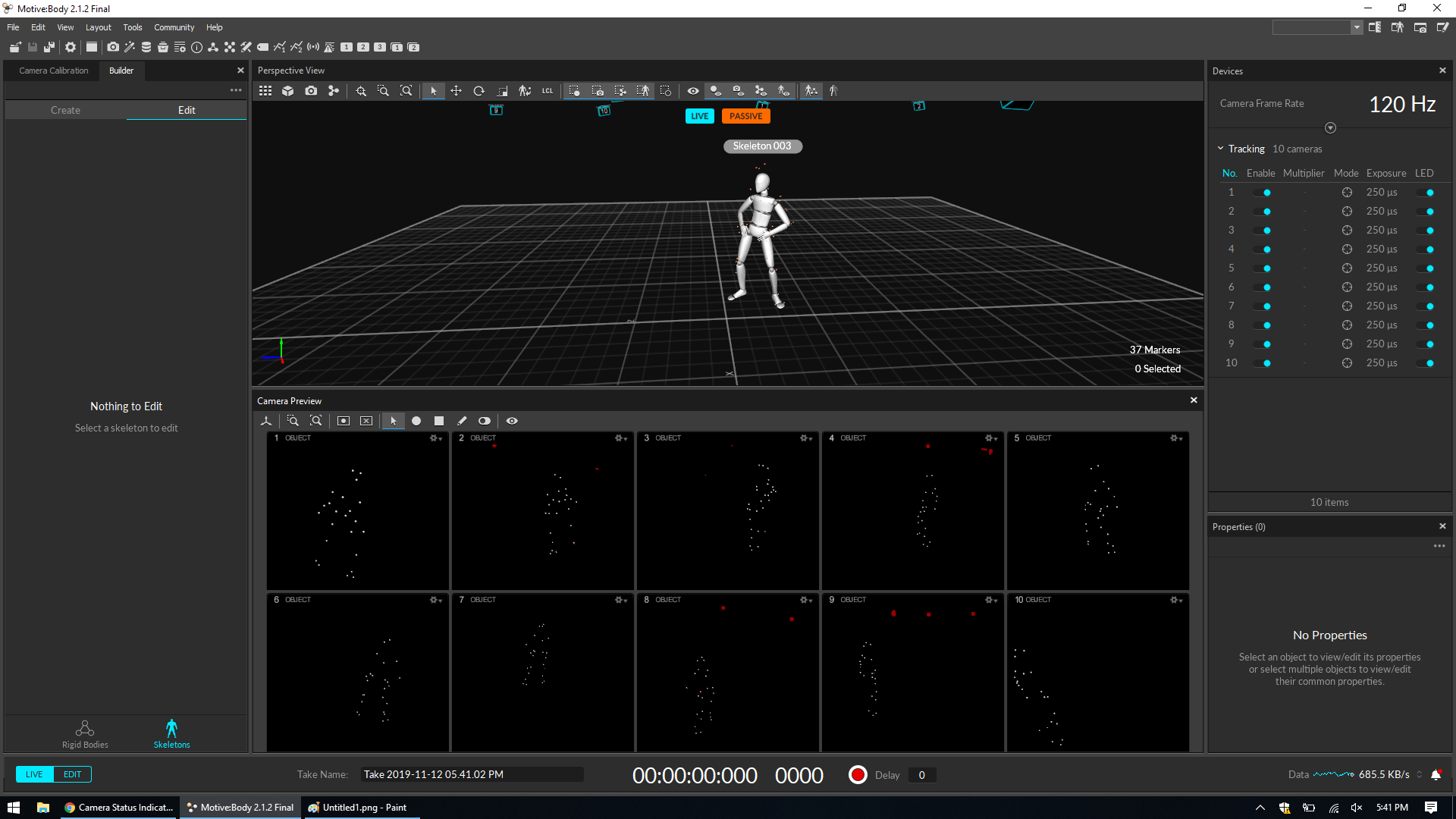

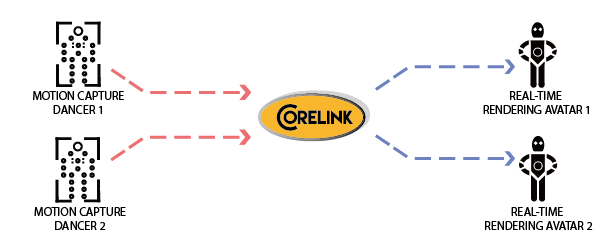

Motion capture streaming

One of the original applications of Corelink was to stream motion capture data from one computer to another using a UDP connection. This setup was used with the OptiTrack system for several Concerts on the Holodeck.
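As a rough illustration of what streaming motion capture over UDP involves, the sketch below packs one frame of marker positions into a single datagram and sends it over the local loopback interface. The wire layout, the function names, and the use of plain sockets are all assumptions made for this example; they are not Corelink's actual format or client API.

```python
import socket
import struct

# Hypothetical wire layout (not Corelink's actual format):
# uint32 frame number, uint16 marker count, then x, y, z as float32 per marker.
HEADER = struct.Struct("<IH")
MARKER = struct.Struct("<fff")


def encode_frame(frame_number, markers):
    """Pack one motion capture frame into a single datagram payload."""
    payload = bytearray(HEADER.pack(frame_number, len(markers)))
    for x, y, z in markers:
        payload += MARKER.pack(x, y, z)
    return bytes(payload)


def decode_frame(payload):
    """Unpack a datagram payload back into (frame_number, markers)."""
    frame_number, count = HEADER.unpack_from(payload, 0)
    markers = [
        MARKER.unpack_from(payload, HEADER.size + i * MARKER.size)
        for i in range(count)
    ]
    return frame_number, markers


def loopback_roundtrip(frame_number, markers):
    """Send one frame over UDP on the local machine and decode what arrives."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver, \
            socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        receiver.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
        receiver.settimeout(2.0)  # UDP gives no delivery guarantee, so do not wait forever
        sender.sendto(encode_frame(frame_number, markers), receiver.getsockname())
        payload, _ = receiver.recvfrom(65535)
    return decode_frame(payload)
```

Keeping each frame inside one datagram means a lost packet costs a single frame rather than stalling the stream, which is usually an acceptable trade for live motion data.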

Concerts on the Holodeck

This performance took place in April 2018. It was hosted by the Music Technology department at NYU Steinhardt and spearheaded by Agnieszka Roginska. The piece is "Take the A Train." The trumpet player, pianist, and two dancers were in the Loewe theater, along with the audience. The drummer, bassist, and two other dancers were in the Dolan recording studio, on the 6th floor of the same building. The two dancers in the Dolan recording studio wore motion capture suits, and their movements were rendered in real time as avatars displayed on the screen in Loewe. The rest of the concert was distributed in the same way, with performers split between Dolan and Loewe.

In October 2018, NYU Steinhardt hosted a concert on the Holodeck as part of the AES 2018 NYC convention. This performance was a collaboration among NYU Steinhardt Music Technology, NYU Courant FRL, Universidad Nacional De Quilmes from Argentina, Norwegian University of Science and Technology, and Ozark Henry. The dancers were at the FRL, and their motion capture data was streamed live via Corelink.

Corelink has also been used with other motion capture systems, such as the HTC Vive.

Facial Capture Streaming

In a later Holodeck concert, we used iClone and LIVE Face for facial capture streaming using TCP connections.

An iPhone running the LIVE Face app was placed in front of a singer. A separate computer ran a program that routed the data from LIVE Face through Corelink to the receiver. The receiver rendered the facial capture data using iClone.
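A routing program like the one described has to deal with the fact that TCP delivers a continuous byte stream rather than discrete messages, so the sender and receiver need to agree on where each message ends. The sketch below shows one common approach, a length prefix in front of every message. The 4-byte prefix, the `frame` function, and the `FrameReader` class are assumptions for illustration only; the source does not describe the format actually used with LIVE Face or Corelink.

```python
import struct

# Assumed framing: a 4-byte big-endian length, followed by the message bytes.
LENGTH = struct.Struct(">I")


def frame(message: bytes) -> bytes:
    """Prefix a message with its length so the receiver can find its end."""
    return LENGTH.pack(len(message)) + message


class FrameReader:
    """Reassembles complete messages from arbitrarily split TCP chunks."""

    def __init__(self):
        self._buffer = bytearray()

    def feed(self, chunk: bytes):
        """Add received bytes and return every complete message now available."""
        self._buffer += chunk
        messages = []
        while len(self._buffer) >= LENGTH.size:
            (size,) = LENGTH.unpack_from(self._buffer, 0)
            end = LENGTH.size + size
            if len(self._buffer) < end:
                break  # the rest of this message has not arrived yet
            messages.append(bytes(self._buffer[LENGTH.size:end]))
            del self._buffer[:end]
        return messages
```

A router would call `feed` with whatever each `recv` returns and forward the completed messages one at a time, regardless of how the network split them.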

Unity + Corelink

A C# client was designed to interface with Corelink and enable real-time data streaming of any kind. These videos are a demo of a multiplayer game in which a player's positional and rotational data are streamed to another person.

Audio streaming

We support audio streaming from one person to another over WebSockets and UDP connections. This is currently implemented with C++, Unity, and WebSockets. Our C++ client, currently called CoreTrip, can send audio in real time. This video uses CoreTrip and Unity:

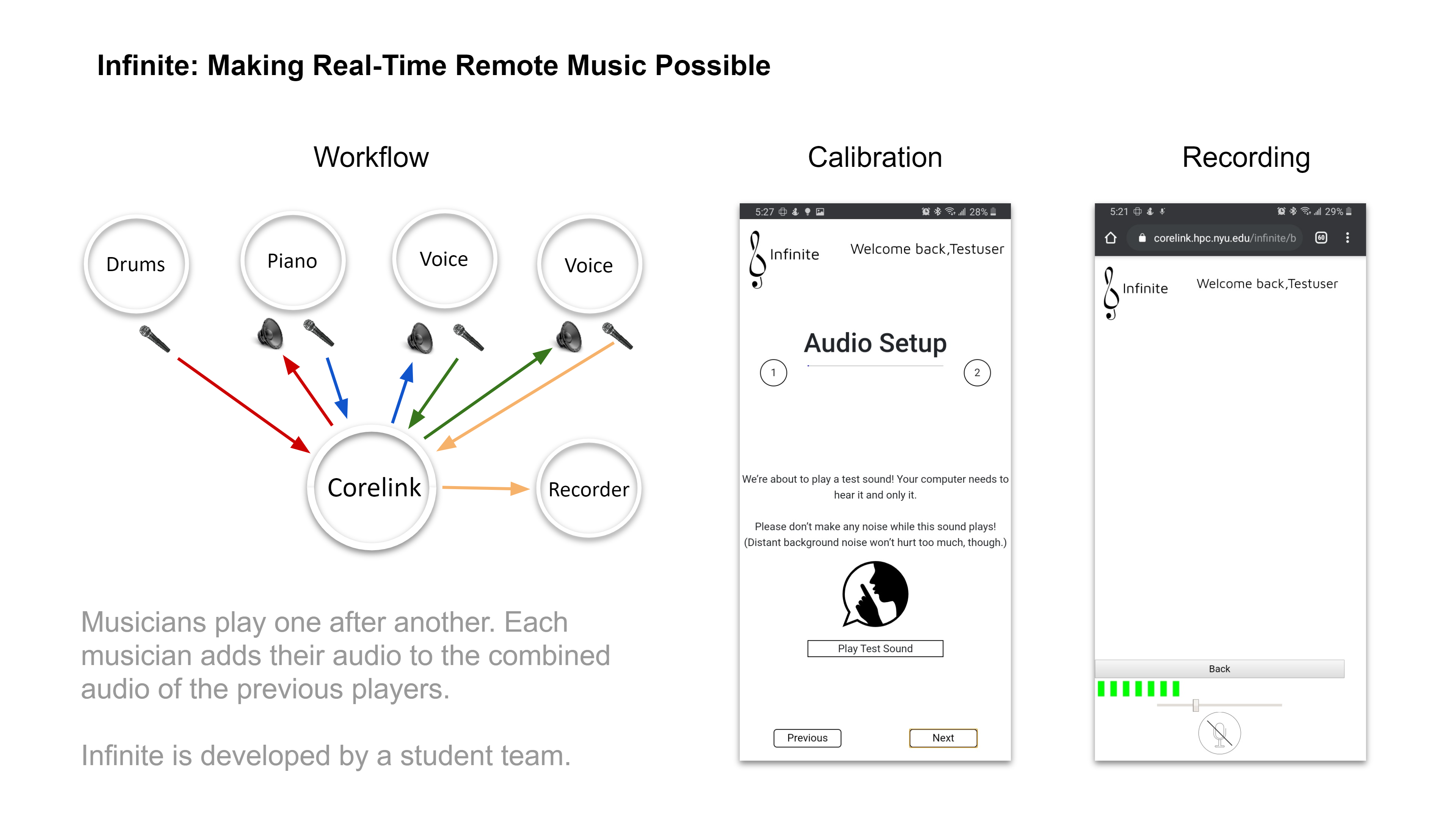

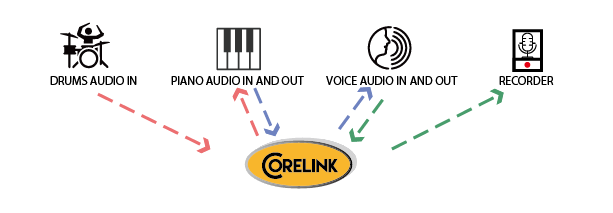

Infinite

Infinite is a way to stream audio in the browser so that musicians can collaborate.

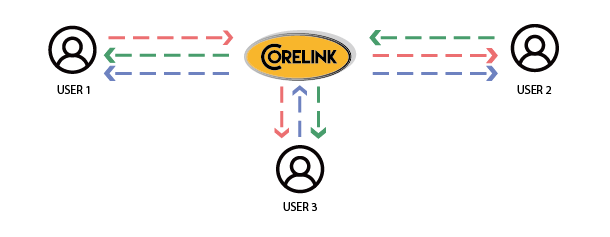

Vive Avatar

Another focus is on sending 3D data, such as avatar tracking data; audio data, such as voice chat; and affective data, such as heart rate sensor readings.

Using these capabilities, we have constructed a virtual conference room. Users wearing tracked markers, like Vive trackers, can send their 3D data to the relay, which then sends the data to a plugin to construct an avatar using an IK system similar to Holojam. This avatar data is forwarded to all other members of the virtual conference room, and the avatar is rendered. Additionally, we forward audio data in a similar manner, combining it with the head pose to correctly position the spatialized audio source at the user’s head. In this way, multiple people can sit in the same virtual space with limited motion and speak with each other.
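The forwarding pattern described here can be sketched as a small in-memory relay: each update from one member is delivered to every other member, and each audio chunk is tagged with the sender's latest head position so a receiver knows where to place the voice. The `ConferenceRoom`, `HeadPose`, and `SpatialAudio` names are hypothetical, and the sketch leaves out the IK plugin and real networking entirely; it only illustrates the fan-out and the pose-plus-audio pairing.

```python
from dataclasses import dataclass


@dataclass
class HeadPose:
    user: str
    position: tuple  # (x, y, z) of the user's head


@dataclass
class SpatialAudio:
    user: str
    position: tuple  # where a receiver should place this voice
    samples: list


class ConferenceRoom:
    """Hypothetical in-memory relay: forwards each message to all other members."""

    def __init__(self):
        self.inboxes = {}          # user -> list of messages received so far
        self.head_positions = {}   # user -> most recent head position

    def join(self, user):
        self.inboxes[user] = []

    def update_head(self, user, position):
        self.head_positions[user] = position
        self._forward(user, HeadPose(user, position))

    def send_audio(self, user, samples):
        # Attach the sender's latest head position to the audio chunk.
        position = self.head_positions.get(user, (0.0, 0.0, 0.0))
        self._forward(user, SpatialAudio(user, position, samples))

    def _forward(self, sender, message):
        for user, inbox in self.inboxes.items():
            if user != sender:  # senders do not receive their own data back
                inbox.append(message)
```

For example, after `room.update_head("ana", (0.0, 1.6, 0.0))` and `room.send_audio("ana", [0.1, -0.1])`, every other member's inbox holds a `HeadPose` followed by a `SpatialAudio` positioned at Ana's head.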

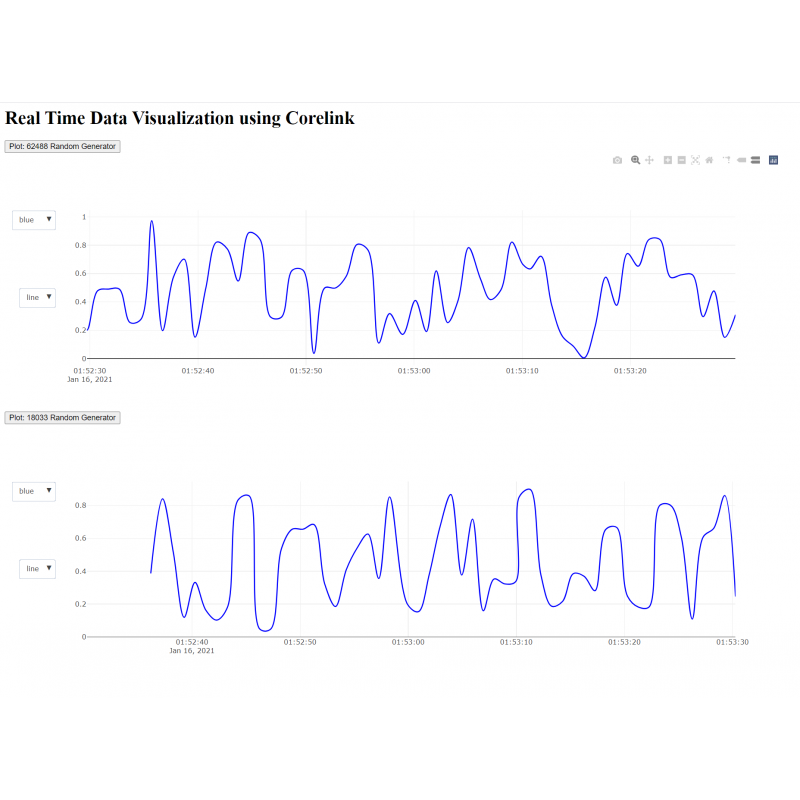

Real-Time Sensor Data Representation on Browser

One significant application of Corelink is the graphical representation of sensor data in the browser. Corelink's low-latency network framework transfers data from the client to the server, and the data is then plotted as a live graph in the browser. So far we have plotted data from temperature and ultrasonic sensors, and clients can use any sensor available to them. This matters because sensor data from many sources, including augmented environments, can be represented and monitored graphically in a browser. The graph can be viewed as lines or bars, with options to change colors, and it can be zoomed in, zoomed out, and downloaded as a PNG file.
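A live graph of this kind typically keeps only the most recent readings per sensor, so the plot scrolls instead of growing without bound. The sketch below shows that idea with a fixed-size window per sensor. The `LiveSeries` class and its method names are hypothetical and do not come from the Corelink codebase.

```python
from collections import defaultdict, deque


class LiveSeries:
    """Keeps the most recent readings per sensor for a scrolling plot."""

    def __init__(self, window=100):
        # Each sensor gets its own deque; old points fall off automatically
        # once the window is full.
        self._series = defaultdict(lambda: deque(maxlen=window))

    def add(self, sensor, timestamp, value):
        self._series[sensor].append((timestamp, value))

    def points(self, sensor):
        """Return the (timestamp, value) pairs currently in the window."""
        return list(self._series[sensor])
```

A plotting front end would call `points("temperature")` on each refresh and redraw the line or bar chart from the returned pairs.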